Cracking Causality in Complex Policy Contexts

17 January 2022 | QuIP Articles

This blog was originally written by Professor James Copestake for the Centre for Qualitative Analysis at the University of Bath, and is republished here with permission. James Copestake is a Director of Bath SDR, and the original Principal Investigator on the research which produced the Qualitative Impact Protocol.

One way or another, we all struggle with the challenge of making credible causal claims. Some seek objective truth through rigorous experimentation and statistical inference. Others doubt whether true causal explanations can ever be disentangled from the biases and interests of those who expound them. Many of us sit somewhere in between these extremes. Judea Pearl reminds us that most causal claims based on statistical regularities also entail making theoretical assumptions, and that the best science makes this explicit. Roy Bhaskar and critical realists encourage us not to let our epistemological struggles with the complexity of reality deter us from agreeing on better approximations to it.

My own interest in causality emerged while conducting research into the drivers of poverty and wellbeing in Peru. At about the same time, Abhijit Banerjee, Esther Duflo and Sendhil Mullainathan were starting to talk international development agencies into investing more heavily in impact assessment based on randomized experiments. It was not hard to find fault with this randomista bubble, and many have done so, including Nancy Cartwright and Angus Deaton. My own response was to ask the following: why are qualitative approaches to assessing the causal effects of development interventions failing to attract similar interest and investment? Jump forward a decade, and here is an outline answer. It comes in two parts.

The first is methodological. Confronted with a bewildering range of approaches, many would-be commissioners are confused about how to distinguish between good and bad qualitative causal research. Doing so by relying on academic reputations gets expensive and is hard to scale up. In search of better alternatives, I embarked on collaborative action research aiming to promote more transparent qualitative causal attribution and contribution analysis. The strategy was to develop, pilot and share what became QuIP – not as a ‘magic bullet’, but as a transparent and practical example of what making qualitative causal claims in complex contexts entails and could deliver. The research took three years, and turned out to be only the start: five more years – and counting – of further action research have followed, organised through Bath Social and Development Research, a University spin-off social enterprise. This ongoing action research has now delivered more than fifty QuIP studies across twenty countries, and has trained over a hundred M&E practitioners to use QuIP in their own work around the world.

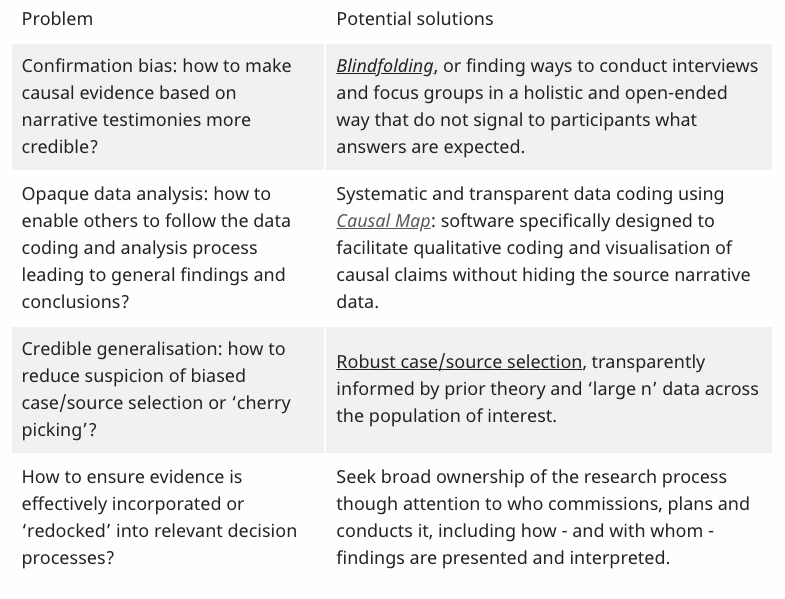

It is beyond the scope of this blog to explain everything we’ve learnt along the way, but the table below picks out four key methodological problems we have sought to address through this work.

The second part of the answer is socio-political, and concerns norms about what constitutes credible and useful evidence among those who fund and manage development programmes and evaluations. These norms tend to favour relatively simple but quantified evidence of causal links that are consistent with a technocratic and linear view of development programming – e.g. that investing more in X leads proportionately to more of outcome Y. This partly reflects a bid for legitimacy through imitation of overly sophisticated research methods. Spurious precision is also conflated with rigour, and bold ‘X leads to Y’ claims tend to trump findings that are harder to explain quickly. In contrast, good qualitative and mixed methods research reveals more complex causal stories and unintended consequences. These may be useful in the longer run, but have the more immediate effect of undermining thin simplifications, thereby making life politically and bureaucratically more difficult.

This blog ends – appropriately enough – with a causal question. How much can methodological innovation on its own contribute to raising demand for qualitative causal research? A practical if difficult answer is that the methodological and socio-political challenges interact so closely that we have to tackle them together. As qualitative researchers, we need to use what limited influence we have to resist wishfully simplistic and hierarchically imposed terms of reference, and to foster more open and collaborative learning.
