How many interviews are enough? (updated)

7 March 2025 | News

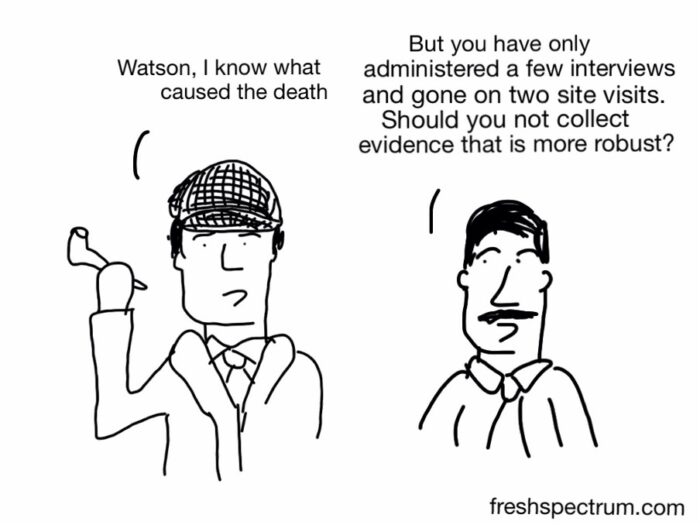

This blog post had slipped out of sight on our news page, and because we are so often asked about sample sizes, I thought it was a good time to update it with a few changes and additional references. Thank you again to the wonderful Chris Lysy for the cartoon, which we republish with his permission. Enjoy!

A typical QuIP evaluation study is based on a ‘unit’ of 24 interviews (sometimes with 4 focus groups), scaled up in units to 48, 72 or 96 (96 being the largest we have used, on some large and complex projects). This invites a lot of questions! Why start with 24? Is that enough interviews? How can that be representative? This blog attempts to answer these questions simply. If you want more, check out our resources on case selection, including a journal article by James Copestake that discusses our approach to sampling in more detail; there are also other useful articles referenced at the bottom of this post.

Qualitative research typically relies on small samples and mostly does not aim to be representative. However, the findings from a small sample should not be discounted if that sample was selected according to robust principles. The purpose of a QuIP isn’t to collect average statistical data on impact; it is to understand how and why a project is or isn’t achieving impact, that is, the causal mechanisms associated with positive (and negative) outcomes. We are looking for evidence to add to what we already know, acknowledging that we usually start with some prior knowledge rather than none.

Most projects we evaluate will have some monitoring data or feedback from staff on the ground that indicates what is and isn’t working, or the places and groups where things seem to be working better or worse than elsewhere. That information, and the questions it raises, are a good starting point for any sample selection. Rather than trying to speak to an average, representative sample, why not speak to the people who are more likely to reveal interesting things about how and why an intervention is working well for some people and not for others? This should add to what we already know and help build our understanding of how and why change is or isn’t happening, and how closely this relates to the project’s theory of change.
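
One way to act on this is contrasting (or "extreme") case selection: rank participants within each location by an outcome indicator from monitoring data, then interview people at both ends. The sketch below is a minimal illustration under stated assumptions; the `Participant` record, its `outcome_score` field and the `select_contrasting_cases` helper are hypothetical, not part of any QuIP tooling, and a real selection would also weigh staff knowledge and other criteria.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Participant:
    """One row of hypothetical project monitoring data."""
    pid: str
    location: str
    outcome_score: float  # assumption: higher = project appears to work better


def select_contrasting_cases(records, per_end):
    """Return {location: (best_cases, worst_cases)}, taking per_end people
    from each end of the outcome ranking within every location."""
    if per_end < 1:
        raise ValueError("per_end must be at least 1")

    # Group records by location so each place is ranked separately.
    by_location = defaultdict(list)
    for record in records:
        by_location[record.location].append(record)

    selection = {}
    for location, group in by_location.items():
        if 2 * per_end > len(group):
            raise ValueError(f"{location}: need {2 * per_end} records, have {len(group)}")
        # Highest scores first; the last per_end entries are the weakest cases.
        ranked = sorted(group, key=lambda r: r.outcome_score, reverse=True)
        selection[location] = (ranked[:per_end], ranked[-per_end:])
    return selection
```

For example, with 24 interviews split across two locations, `per_end=6` gives 12 people per location: six where the project appears strongest and six where it appears weakest.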

If your main purpose is to confirm a hypothesis (e.g. that at least 75% of respondents have benefitted from a project), then the number of randomly selected cases needed to raise your confidence from, say, 50% to 95% can be surprisingly small. “Dion (1998) uses Bayes’ Rule to demonstrate that if a researcher starts out indifferent about whether X is a necessary condition for Y, and just five randomly selected country case studies all reveal X did indeed precede Y, then confidence in the causal claim rises from 50% to 95%.” (Copestake, 2020). The more sure you are to start with, the smaller the number of cases needed to test prior thinking. If your main purpose is more exploratory, consider what is needed for ‘saturation’ sampling to maximise the potential to learn about a range of drivers of change (see below for more).
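
The arithmetic behind Dion’s point can be sketched with Bayes’ Rule. This is an illustrative reconstruction, not necessarily Dion’s own model: we assume that if X is *not* necessary for Y, each randomly selected case would still show X preceding Y with probability `p_alt = 0.5`. Under that assumption, five consistent cases lift confidence from 50% to about 97% (past the 95% mark), and a stronger starting belief needs fewer cases. The function names are hypothetical.

```python
def posterior(prior, n_cases, p_alt=0.5):
    """Confidence that 'X is necessary for Y' after n_cases randomly selected
    cases in which X always preceded Y.

    If X is necessary, every case shows X before Y (likelihood 1).
    If not, each case is assumed to show X before Y independently with
    probability p_alt (an illustrative assumption, not necessarily Dion's).
    """
    likelihood_if_not = p_alt ** n_cases
    return prior / (prior + (1 - prior) * likelihood_if_not)


def cases_needed(prior, target=0.95, p_alt=0.5):
    """Smallest number of confirming cases that lifts confidence to target."""
    if not (0 < prior < 1 and 0 < target < 1 and 0 <= p_alt < 1):
        raise ValueError("prior and target must lie in (0, 1), p_alt in [0, 1)")
    n = 0
    while posterior(prior, n, p_alt) < target:
        n += 1
    return n


for prior in (0.2, 0.5, 0.8):
    print(f"starting at {prior:.0%}: {cases_needed(prior)} cases to reach 95%")
# starting at 20%: 7 cases to reach 95%
# starting at 50%: 5 cases to reach 95%
# starting at 80%: 3 cases to reach 95%
```

If `p_alt` is closer to 1 (X would often precede Y even if it were not necessary), many more cases are needed, so the plausibility of alternative explanations matters as much as the raw count.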

Why any number, and why 24? There are three main reasons. First, a number: we wanted a standard reference point for people when thinking about QuIP. As well as fitting the two reasons below, 24 worked well for timing and budget in the initial pilots (two researchers collecting data for one week), and it has stuck from there. But we have also interviewed 25, 30 and (often) 36 people, so it’s not a hard and fast rule, just a good starting point: iterate based on your context.

Second, 24 is such a beautifully divisible number, with oh so many useful factors for stratification! We often stratify our sample purposively, for example by age, gender or location. With 24 you can, for example, interview 12 people in each of two locations or 8 in each of three locations (to control for any unexpected factors that may be pertinent to just one place), and split the whole sample between men and women and between older and younger respondents. Sub-clusters of 6, 8 and 12 give you a lot of flexibility, which is why we often end up with 36 interviews when there are too many points of stratification for 24.
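
The divisibility argument is simple arithmetic, sketched here in Python. `per_cell` is a hypothetical helper that checks whether a sample splits evenly across every combination of strata; the fourth, three-level factor in the last two lines (say, three intervention types) is an illustrative assumption, not a design we are reporting.

```python
from math import prod


def divisors(n):
    """All positive whole-number divisors of n, in ascending order."""
    return [d for d in range(1, n + 1) if n % d == 0]


def per_cell(sample_size, strata_levels):
    """Interviews per cell when sample_size is split evenly across every
    combination of strata, or None if it does not divide evenly.

    strata_levels lists how many categories each stratification variable has,
    e.g. [3, 2, 2] for three locations x two genders x two age groups.
    """
    cells = prod(strata_levels)
    if sample_size % cells != 0:
        return None
    return sample_size // cells


print(divisors(24))                # [1, 2, 3, 4, 6, 8, 12, 24]
print(per_cell(24, [2, 2, 2]))     # 3: two locations x gender x age
print(per_cell(24, [3, 2, 2]))     # 2: three locations x gender x age
print(per_cell(24, [3, 3, 2, 2]))  # None: add a hypothetical three-level factor
print(per_cell(36, [3, 3, 2, 2]))  # 1: 36 accommodates that design
```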

Finally, saturation. Findings in this area vary: Guest, Bunce and Johnson (2006) found that thematic saturation could be reached within 12 interviews, whereas Hennink, Kaiser and Marconi (2017) argue that meaning saturation (the point at which no further dimensions, nuances, or insights of issues are identified) requires 16-24 interviews. Hagaman and Wutich (2017) revisited Guest et al.’s study and showed that reaching saturation for findings across different sites required 20-40 interviews. Taking into account the previous points about logistics, budget and divisibility, we have found that 24 fits well with this research into saturation. Our own data (from almost 100 evaluations) shows that analysing much more than approximately 20 interviews yields few additional new codes or insights. If your sample is a relatively homogeneous group that shares similar experiences, returns diminish sharply beyond this point. 24 interviews allow us to provide a cost-effective and timely evaluation that explores the experiences of a particular group, while allowing for some anomalies and not-quite-so-useful interviews. If you have very different types of groups, places or interventions, then scale up.
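
Diminishing returns of this kind are often tracked by counting how many new codes each additional interview contributes. The sketch below does that and applies a simple, hypothetical stopping rule: saturation is declared once `run_length` consecutive interviews add no new codes. The rule, its default of 4 and the toy codes are assumptions for illustration, not the method behind the QuIP figures above. The result also depends on the order in which interviews are analysed.

```python
def new_codes_per_interview(coded_interviews):
    """For each interview, in the order analysed, count codes not seen before."""
    seen = set()
    counts = []
    for codes in coded_interviews:
        fresh = set(codes) - seen
        counts.append(len(fresh))
        seen |= fresh
    return counts


def saturation_point(coded_interviews, run_length=4):
    """Number of interviews analysed before the first run of run_length
    consecutive interviews that add no new codes, or None if no such run."""
    if run_length < 1:
        raise ValueError("run_length must be at least 1")
    streak = 0
    for i, count in enumerate(new_codes_per_interview(coded_interviews)):
        streak = streak + 1 if count == 0 else 0
        if streak == run_length:
            return i + 1 - run_length
    return None


# Made-up coding of seven interviews, purely to show the mechanics.
toy = [
    {"code_a", "code_b"},
    {"code_b", "code_c"},
    {"code_d"},
    {"code_a"},
    {"code_c"},
    {"code_b"},
    {"code_a", "code_d"},
]
print(new_codes_per_interview(toy))  # [2, 1, 1, 0, 0, 0, 0]
print(saturation_point(toy))         # 3
```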

BUT, I will repeat: the purpose of a QuIP isn’t to collect average statistical or representative data on impact, so the words of Kizzy Gandy (2024) are worth remembering before you are tempted to try to saturate all possible avenues… and her excellent article is also worth reading!

“It is implicitly assumed in thematic saturation studies that saturation is always important. This is true for generating generalisable knowledge, but evaluators tend to prioritise program-specific performance insights. Generalisable knowledge is associated with a positivist paradigm whereas evaluators typically move between positivist, postmodern, and constructivist paradigms across different key evaluation questions and draw on multiple sources of data. Therefore, qualitative data is typically used by evaluators to develop a depth of understanding rather than breadth, and sometimes qualitative sample sizes as low as one can be justified.”

Referenced articles
