QuIP and FairVoice

| 5 January 2023 | News

Last year, the Fairtrade Foundation commissioned Bath SDR to evaluate the impact of their work with cocoa farming cooperatives in Côte d’Ivoire. Fairtrade wanted an accurate portrayal of everything that was affecting farmers within these cooperatives to help inform organisational and programme decisions. By asking farmers about any changes in their lives, rather than focusing directly on the project, QuIP was able to frame Fairtrade’s interventions in the wider context of factors such as climate change and market forces.

This QuIP study was a new way of working for all parties involved (Bath SDR, Fairtrade and On Our Radar) and we learned a lot through this process. This blog is an opportunity to share some of our reflections on the key benefits and challenges of combining these methods. A case study is also available here.

Background

The study looked at two Fairtrade cocoa farming cooperatives in Côte d’Ivoire. Over four months, 42 respondents were asked a series of questions covering four core domains relevant to the programme: improving cocoa incomes, environmental protection, diversification of income and cooperative membership.

These questions were asked through FairVoice, a mixed-method solution for remote data collection that enables farmers and workers to share their experiences directly through their mobile devices. Their messages are received in a central dashboard. This data collection tool was designed and developed by Fairtrade in partnership with On Our Radar. This collaborative study experimented with incorporating elements of the QuIP methodology into the FairVoice approach.

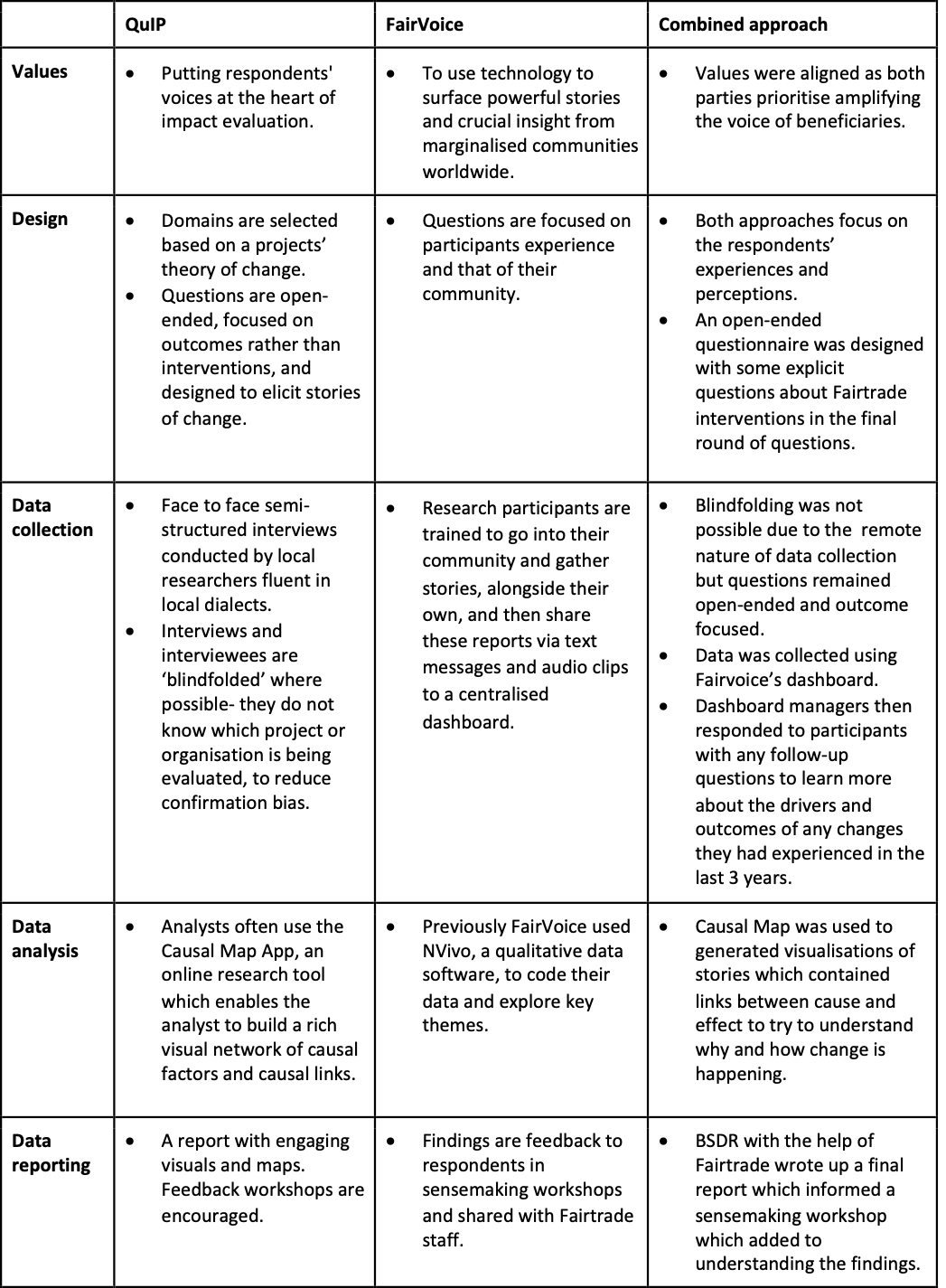

The table below provides an overview of the main features of the two approaches and how they were combined for this project:

Bath SDR Reflections

QuIP data collection often involves translation: typically, local researchers conduct the interviews in the local language or dialect and then transcribe them in English (or in the local language, which is then translated). The transcripts that are coded and analysed are therefore the respondents’ voices as written by the interviewer.

One benefit of FairVoice is that the responses are typed out by the respondents themselves, in their own words and in their own language. QuIP aims to reduce confirmation bias through blindfolded interviewers [1] and goal-free questioning. Allowing participants to tell their own stories in their own words further reduces potential interviewer bias or misrepresentation.

However, in this case, analysis and reporting were conducted in English, so respondents’ words still had to be translated (and were translated after the fact by On Our Radar staff). This presented its own challenges, as the dashboard managers had to translate without the aids of tone of voice or gesture that often assist QuIP interviewers. For example, in one instance ‘also’ was mistranslated as ‘so’, which altered the chain of causal links. This highlighted the risks of mistranslation and encouraged Bath SDR to reflect on the importance of getting translation right, regardless of how the data is collected.

Another key benefit of bringing the two methodologies together was the focus on change that QuIP brings and the way in which domains are used to structure the questions used by FairVoice.

Traditional QuIP interviews normally last between 60 and 90 minutes, which is a significant time commitment for many respondents, whereas FairVoice allows participants to respond in their own time and using their preferred medium. This gave farmers longer to reflect on the questions, and they could contribute at a time that suited their schedule. FairVoice also invited participants to put interview questions to others in their community. This snowball effect encouraged people who might not normally have taken part in research to share their experiences.

However, individuals were not always as responsive as in a face-to-face interview: some questions were ignored by participants and many replies lacked detail. Responses peaked in the first round, with 31 respondents out of 42 sending in responses to the open-ended questions, and were lowest in the second, with only 19 respondents [2]. This is perhaps understandable as, however friendly the dashboard managers were, it is much easier to ignore a text than a person sitting in front of you.
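To put the two figures quoted above on a common footing, the short sketch below converts them into response rates. It uses only the numbers from the text (42 respondents, 31 replies in the first round, 19 in the second). The variable and function names are our own illustrative choices and are not part of the FairVoice dashboard or the study's analysis.

```python
# Figures taken from the text: 42 respondents in total; 31 answered the
# open-ended questions in the first round and 19 in the second.
# All names below are illustrative assumptions, not part of FairVoice.
TOTAL_RESPONDENTS = 42
open_ended_responses = {"round 1": 31, "round 2": 19}


def response_rate(responded: int, total: int) -> float:
    """Return the share of respondents who replied, as a percentage."""
    return 100 * responded / total


# Response rate per round, rounded to one decimal place.
rates = {
    rnd: round(response_rate(count, TOTAL_RESPONDENTS), 1)
    for rnd, count in open_ended_responses.items()
}

for rnd, rate in rates.items():
    print(f"{rnd}: {rate}%")
```

On these figures, roughly three in four respondents answered the open-ended questions in the first round, compared with fewer than half in the second.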

Examples of follow-up questions used by the dashboard managers (staff who interact with the incoming text messages via a central system and can send follow-up messages back):

Capacity constraints for the dashboard managers were an issue, particularly in the first few rounds, where follow-up questions were vital. Follow-up questions are the ‘but why?’, ‘how?’, ‘what do you mean by that?’ questions that give QuIP studies their depth. There were also some technology challenges specific to this project, which affected the tracking of conversations in the dashboard.

Dashboard managers weren’t always able to ask all the necessary questions to get the quality of data usually collected in traditional QuIP studies.

This meant that coding the data in the Causal Map app was sometimes challenging, as the detail needed to create causal chains was not always there. For example, statements about income diversification often omitted the drivers and outcomes of a new business and instead described the business itself. We used ‘plain coding’ in such cases, as it allowed the analyst to capture information, such as details about respondents’ businesses, which was relevant to the study but did not contribute to a causal chain. This enabled more information to be gleaned from the responses but was not a substitute for in-depth causal stories.

That said, using causal mapping to analyse the data from FairVoice provided useful visualisations of the results, which have been very beneficial for sharing findings with a number of Fairtrade stakeholders, including the participants themselves.

Conclusion

This project provided valuable learning for all organisations involved and encouraged Bath SDR to reflect on our practices, in particular the importance of accurate translation and the use of follow-up questions. FairVoice’s remote data collection method had several benefits as it was less demanding for beneficiaries and allowed respondents to reply in their own words. We would welcome entering into a similar collaboration again in future to build on this experience.

Footnotes

[1] Where possible, both interviewer and interviewee have little or no knowledge of the programme or the hypotheses being tested, and work completely independently of the project team and commissioning organisation.

[2] The response rate for non-codable closed questions was higher.
