Ten years of stitching together the QuIP patchwork

| 16 September 2025 | News

The end of this year will mark ten years since James Copestake and I set up Bath Social & Development Research as a way to continue experimenting with the QuIP – a distinctive approach to qualitative impact evaluation. This was also an experiment in ‘mainstreaming’ research conducted under a UKRI/DFID grant to the University of Bath beyond the grant-funded period. The official incorporation of the non-profit consultancy Bath SDR was in December 2015, following six months of intense research and development (supported by Innovate UK). Since then, we have worked on or supported over 100 different evaluations in more than 30 countries, and trained more than 300 evaluators and researchers.

(Photo: James Copestake, Steve Powell & Fiona Remnant battling perspective at UKES 2025, Glasgow)

QuIP (Qualitative Impact Protocol) was developed in response to a specific and practical need for a relatively fast, simple, rigorous and affordable qualitative method for small and medium-sized NGOs to understand whether and how their activities contributed to the change they were expecting. It was consciously eclectic in stitching together a patchwork inspired by a range of existing theory-based evaluation methods, including (of course) Most Significant Change, and our original briefing paper lays out where we borrowed and departed from Contribution Analysis, Realist Enquiry, Process Tracing and Outcome Harvesting. We consciously adopted the name QuIP to clearly label our approach, but never in the belief that this would supplant other methods, suit all purposes, or become a fixed standard. Indeed, the past ten years have felt like an ongoing action-research project – trying out different combinations of approaches, mixing with other methods in larger evaluations, adapting to context, innovating as we went along, and saying no when we felt QuIP wasn’t the right approach. The co-development of Causal Map to help promote causal mapping in evaluation was a further example of this. But as we reach this (fairly arbitrary) milestone, and recognition of the QuIP as an ‘approach’ on the Better Evaluation website, we need to guard against anything being set in stone.

The very apt term ‘bricolage’ has gained popularity since Apgar and Aston’s 2022 paper, The Art and Craft of Bricolage in Evaluation, and it brilliantly describes the experience of most qualitative evaluators who “often only adopt certain parts of methods, and skip or substitute recommended steps to suit their purposes.” We know from our own experience, and from the many people who have shared their use of QuIP with us, that this also applies to the steps involved in QuIP. For many, the goal-free, outcomes-based approach to interviewing (with or without blindfolding) is the main aspect they take away; others use the causal approach to coding and analysis with existing documentary and interview evidence. There are clear differences with some other related methods (contradictions are common in families!), so we might warn people off certain adaptations – such as asking people for direct feedback about an intervention as you might do in a Realist Evaluation – but encourage others, such as using observable outcomes collected from a range of sources using Outcome Harvesting as the starting point to design the domains of change you would like to explore (even substantiate) with QuIP respondents.

In the spirit of continuing action research, and as we review the QuIP patchwork, now more than ten years old, we would love to hear from any users who have stories to share of experimentation and bricolage. Please get in touch with us, whether to share a snippet or propose a guest blog.

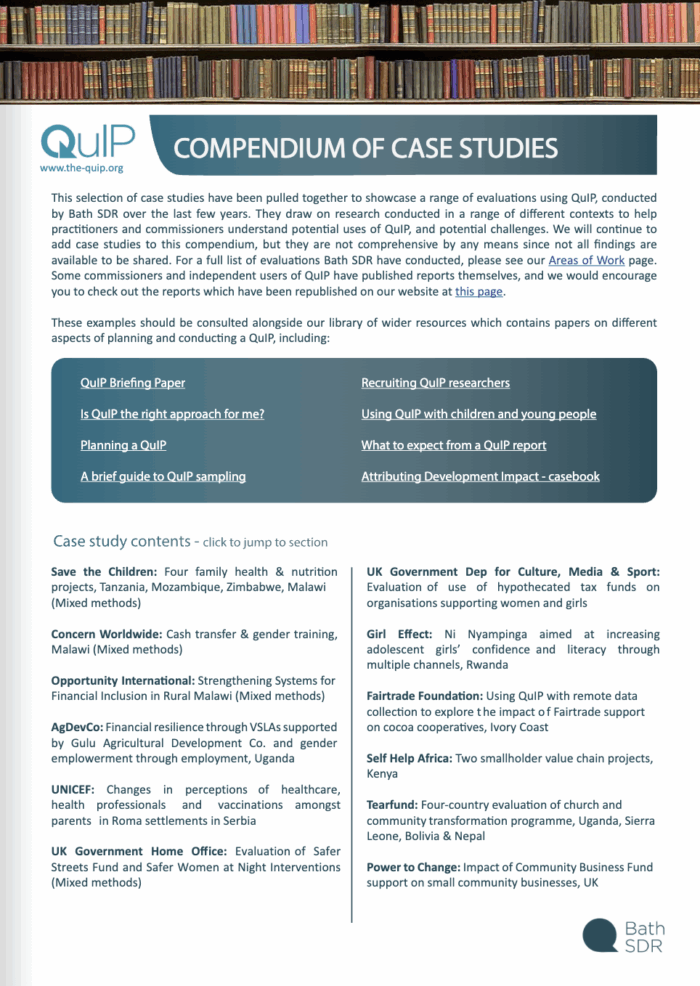

In the meantime, we have put together a collection of case studies and links to useful documents, and don’t forget our large collection of Resources available to all.
