Qualitative research in a pandemic – what we learned in 2020

| 23 February 2021 | News

Almost a year ago we published a blog on the back of a virtual discussion held with researchers from the Bath SDR network and beyond about the challenges and opportunities facing qualitative researchers conducting fieldwork during the Covid-19 pandemic. Despite the uncertainty about procedures and practicalities, we committed to ‘try, learn, fix, and try again’. Now, after a year’s worth of trying and learning, we wanted to share some reflections from our experiences of adapting and developing the QuIP approach.

With the safety of our researchers and respondents at the front of our minds, we initially pressed pause on data collection and waited cautiously to see how the situation would develop in each of the countries where QuIP studies were planned. All of Bath SDR’s researchers are based in-country so there was no concern about international flights, but lockdown still restricted national movement, at least initially. By the end of 2020, as local lockdowns were eased, over 270 in-depth interviews went ahead across nine different Bath SDR projects. Some took place virtually via web-based applications/over the phone, but most of the interviews were conducted in person – socially distanced.

Continuing conversations

Whilst face-to-face interviews were on hold, the conversations with commissioners and researchers certainly weren’t. Keeping conversations going and being open and transparent about the new challenges better enabled us to hit the ground running when it was safe to do so. Listening to the expertise and the concerns of our local researchers was essential to deciding when and how to proceed.

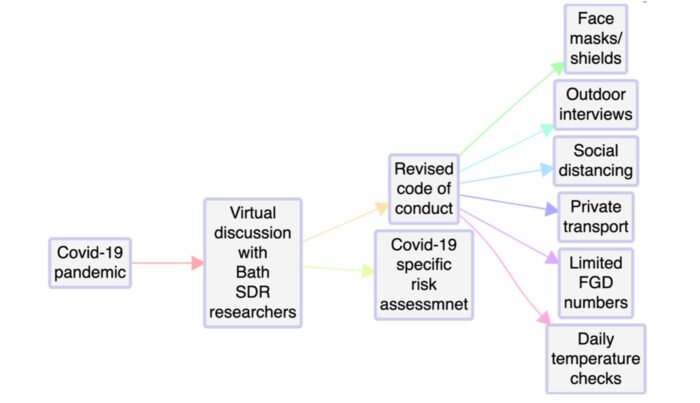

We prepared a Covid-19 specific risk assessment to be completed collaboratively by the lead researcher and project manager for each study. We also updated our code of conduct for fieldwork and Bath SDR was responsible for contacting each researcher individually to make sure they were comfortable with the proposed plan. Each team then allocated a Covid-19 monitor to ensure rules, regulations, and additional measures were strictly followed.

The diagram below illustrates some of the practical measures put in place to protect interviewers and interviewees:

Testing times, and timelines

In addition to the practical and logistical issues, conducting research during the pandemic also presented methodological challenges for the QuIP approach.

We asked our lead researchers whose teams conducted face-to-face interviews during 2020 to contribute to this blog post. Many thanks to Kirorei Kiprotich, Peter Mvula, and Clement Sefa-Nyarko, who all provided detailed feedback reflecting on their experiences of what worked well and what we can build on in the future.

Whilst our commissioners did want to capture stakeholders’ experiences during the pandemic, we had to be careful in the design phase not to lose sight of the original purpose of conducting the research – to gather stories of change to understand and evaluate the impact of a particular programme.

QuIP-style questions are mostly open-ended and focused on intended outcomes, rather than specific interventions. Respondents are asked to reflect on whether anything has changed in a particular area of their lives over a defined recall period (typically before and after an intervention). Our concern was that respondents might struggle to reflect on outcomes brought about by the programme (and other drivers), and that their narratives would be particularly affected by recency bias with regard to Covid-19.

It was clear that we needed to find a way to distinguish the different time periods we were interested in covering – i.e. before/after the intervention and during the pandemic specifically. We played around with ideas with our research teams, discussing whether it would work better to ask a Covid-related question at the end of each outcome domain, or to conclude with a section dedicated to covering more recent changes. We piloted the latter, alongside the use of visual timelines, similar to the example below.

The researchers felt that in most cases the recall period wasn't an issue. Although memories of Covid-19-related changes were fresher in respondents' minds, the use of timelines and repeated references to the established recall period or to specific months/events enabled respondents to share their broader experiences and perceptions of change. As time goes on, and the impact of Covid-19 is perhaps more pronounced and long-lasting, this approach may need to be reconsidered. In some of the studies, the closed questions (used to draw discussions to a close in each domain and summarise perceptions of change) were clearly affected by the attempt to straddle two recall periods. Thankfully, there is always an open-ended follow-up to the closed summary question, and these responses indicated that some respondents were answering based on their overall perceptions of change pre-Covid, whilst others were reflecting on the effects of the pandemic. In future studies, where closed questions are used, they should be more specific about the timeframe to avoid any confusion.

Another potential problem was that researchers might struggle to build the necessary rapport with respondents, with a literal (2m) distance between them. All of our researchers said that clearly explaining the setup to respondents in advance helped, but as these practices are now commonplace and their importance generally understood, they did not particularly affect the rapport during interviews. The Kenya team perceived that their strict adherence to the measures gave respondents more confidence to take part and researchers in Ghana commented that respondents were happy to receive the PPE, although one or two felt slightly uncomfortable wearing a mask as they needed to speak louder than normal to be heard.

Until data collection began, it wasn't clear whether the pandemic would have an effect on response rates, but most of our teams didn't have any problems reaching the target sample size. In Kenya and Ghana, a couple of respondents asked to speak over the phone/via WhatsApp video call rather than in person. The researchers felt that, where respondents have sufficient access to technology, these platforms could support wider data collection in the future. However, there are some QuIP contexts where this would not be possible; for example, the team in Malawi highlighted that the communities they visited wouldn't have been able to participate remotely due to poor networks and limited access to technology.

We asked whether there were any opportunities that had arisen due to the pandemic which the researchers would like to see developed further. In addition to the use of technology during interviews, they commented that virtual training was something new to them, which they would never have contemplated doing before Covid-19. Bath SDR typically trains new teams in person, with our research leads in each country providing top-up training after the first QuIP study. However, we were able to adapt and move everything online with a mix of self-study sessions, live discussions, and practical exercises. The feedback so far has been positive and the quality of data collected has remained high, providing reassurance that this approach is effective.

After a tumultuous year of incredible advances in our ability to share information and guidance through the ether (not to mention the simultaneous move to analysis in Causal Map!), there are certainly changes to the way we train and communicate which we will continue to use, and experiments with online interviewing have been modestly successful – most notably with respondents who are representing an organisation, rather than in personal interviews. However, we have worked with our research teams to prioritise face-to-face interviews wherever possible, and we continue to believe that this is the best approach to elicit really reflective stories of change. The personal interaction between talented interviewer and willing respondent still cannot quite be replaced by online surveys, however interactive.

We are always looking into ways to further strengthen training delivered online – so please get in touch if you have any experiences/best practices you’d like to share with us!

Unfortunately you are not able to comment on news articles on this site (there are gremlins in the machine who just won’t leave!), so please interact with us on Twitter @bathsdr or contact us via the details on our website.
